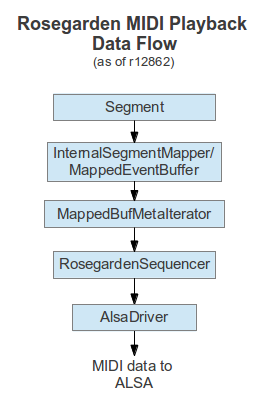

How a note becomes a sound in Rosegarden

A note is a type of Event. All events live in Segments. There are two kinds of segments, internal and audio, and notes live in the internal kind. The first steps towards becoming a sound occur even before you tell Rosegarden to play. As soon as a document is loaded, Rosegarden creates (among other things) a SequenceManager and a RosegardenSequencer, which we'll get to later. The SequenceManager holds a number of what are called mappers, all derived from base class MappedEventBuffer. These include InternalSegmentMapper and the metronome mapper, both of which come up again below.

All mappers are made by SegmentMapperFactory. After SegmentMapperFactory constructs a mapper object, it calls a virtual function named “dump”. The name is a historical holdover from when Rosegarden was two programs.

Mappers contain an array of MappedEvent. MappedEvents are lower level than notes. For instance, notes can trigger ornaments, but MappedEvents can't. MappedEvents have times in RealTime (performance time from the beginning of the composition), while notes have times in timeT (bar-wise time).

Dump's job is to put MappedEvents into that array. The mappers each do it in a different way. InternalSegmentMapper iterates through all the events in a segment and more-or-less makes a MappedEvent from each. But that's a dreadful simplification.
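To make the idea of “dump” concrete, here is a minimal sketch of turning bar-wise events into real-time ones. Everything in it is hypothetical and simplified: SketchEvent, SketchRealTime, SketchMappedEvent, ticksToRealTime and dumpSketch are illustrative names, not Rosegarden's classes, and it assumes a single constant tempo given in microseconds per quarter note. It also skips any event that is not a note, in the spirit of ignoring event types that have no effect on sound.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified stand-ins; these are not Rosegarden's real classes.
struct SketchEvent {
    std::string type;  // "note", "text", ...
    long time;         // musical time in ticks (plays the role of timeT)
    long duration;     // length in ticks
    int pitch;
    int velocity;
};

struct SketchRealTime {
    long sec;
    long nsec;
};

struct SketchMappedEvent {
    SketchRealTime time;      // performance time from the start of the piece
    SketchRealTime duration;
    int pitch;
    int velocity;
};

// Assumption: one constant tempo, expressed as microseconds per quarter note.
SketchRealTime ticksToRealTime(long ticks, long ticksPerQuarter, long usecPerQuarter) {
    assert(ticksPerQuarter > 0);
    long long usec = static_cast<long long>(ticks) * usecPerQuarter / ticksPerQuarter;
    return SketchRealTime{static_cast<long>(usec / 1000000),
                          static_cast<long>((usec % 1000000) * 1000)};
}

// The "dump" step: walk the events and keep only the ones that make sound.
std::vector<SketchMappedEvent> dumpSketch(const std::vector<SketchEvent>& events,
                                          long ticksPerQuarter, long usecPerQuarter) {
    std::vector<SketchMappedEvent> mapped;
    for (const SketchEvent& e : events) {
        if (e.type != "note") continue;  // non-sounding event types are skipped
        mapped.push_back(SketchMappedEvent{
            ticksToRealTime(e.time, ticksPerQuarter, usecPerQuarter),
            ticksToRealTime(e.duration, ticksPerQuarter, usecPerQuarter),
            e.pitch, e.velocity});
    }
    return mapped;
}
```

The real conversion has to cope with tempo changes, ornaments and much else, which is why the one-event-in, one-event-out picture is only a first approximation.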

A lot of the conversion work is done in MappedEvent.cpp. Most event-types that have no effect on sound are just ignored. InternalSegmentMapper also contains a ChannelManager, and at the end of “dump” it tells it to find a channel interval to play on. When that's done, the mappers collectively are holding MappedEvents that correspond to all the sounds (and other sound-like events) in the composition, each tagged with the time it should play.

SequenceManager is a CompositionObserver. As the user edits, SequenceManager learns about new, deleted, and altered segments, and re-dumps as needed. So the mappers and the composition are always in sync, give or take a fraction of a second to catch up.

I mentioned earlier that when a document is loaded, Rosegarden also creates a RosegardenSequencer. SequenceManager also keeps RosegardenSequencer up to date on the state of the composition. In particular, RosegardenSequencer holds a MappedBufMetaIterator, which always contains a set of MappedEventBuffer::iterators, corresponding to the set of mappers SequenceManager has.

Now when you give Rosegarden the command to play, a number of things happen, but that mostly has to do with co-ordinating playing state against possible interruptions, so I'll skip right to the part where it's playing.

When we're playing, we repeatedly call MappedBufMetaIterator's member function fillCompositionWithEventsUntil, telling it the time-slice of the composition that should play. We also pass it an inserter derived from MappedInserterBase. For playing sounds, we use MappedEventInserter. (We used to just pass a MappedEventList to be filled, but we needed flexibility in order to use the sound-playing logic for MidiFile output.)

Nowadays fillCompositionWithEventsUntil slices the time-slice further, so that segments only begin on the sub-slice boundaries. It passes each sub-slice to fillNoncompeting. fillNoncompeting figures out which mappers can possibly play during the sub-slice and turns off the others.
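The sub-slicing idea can be sketched roughly as follows. This is an illustration only, not the actual Rosegarden code: SketchSlice, splitAtSegmentStarts and canPlayDuring are hypothetical names, times are plain integers (think milliseconds) rather than RealTime, and each mapper is reduced to a start and end time.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Half-open interval [first, second); a stand-in for a RealTime time-slice.
using SketchSlice = std::pair<long, long>;

// Hypothetical helper: cut [start, end) so that every segment start that falls
// inside it lands on a sub-slice boundary.
std::vector<SketchSlice> splitAtSegmentStarts(long start, long end,
                                              std::vector<long> segmentStarts) {
    assert(start <= end);
    std::sort(segmentStarts.begin(), segmentStarts.end());
    segmentStarts.erase(std::unique(segmentStarts.begin(), segmentStarts.end()),
                        segmentStarts.end());

    std::vector<SketchSlice> slices;
    long cursor = start;
    for (long s : segmentStarts) {
        if (s <= cursor || s >= end) continue;  // only cut strictly inside the slice
        slices.emplace_back(cursor, s);
        cursor = s;
    }
    if (cursor < end) slices.emplace_back(cursor, end);
    return slices;
}

// A mapper covering [mapperStart, mapperEnd) can only play in a sub-slice it overlaps.
bool canPlayDuring(long mapperStart, long mapperEnd, const SketchSlice& slice) {
    return mapperStart < slice.second && mapperEnd > slice.first;
}
```

With cuts placed this way, a mapper is either a candidate for the whole of a sub-slice or starts after it, so the set of active mappers can be decided once per sub-slice.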
fillNoncompeting then traverses all the relevant MappedEvents by executing a nested (double) loop. The inner loop visits each active mapper iterator and processes its next MappedEvent. Even if the MappedEvent would have started before this slice, it is processed. Since the mapper iterators are kept always pointing to the last MappedEvent they played, this can only happen if we jumped in time into the middle of a note.

For each MappedEvent, we decide whether it should sound. For historical reasons, the acceptance logic lives in various places, mostly in acceptEvent, which mostly checks track mutedness and soloing. If accepted, we call doInsert in the mapper. InternalSegmentMapper's doInsert adds some arguments and calls its ChannelManager's doInsert method. ChannelManager::doInsert's main job is to call the inserter's insertCopy method. But first it guarantees that the channel is set up correctly for this note to play on, using insertCopy to send whatever extra MappedEvents are needed to accomplish this.

The outer loop is simpler: it just runs until no mapper has played a note during this slice (except the metronome mapper).

When fillCompositionWithEventsUntil is done, MappedEventInserter has filled a MappedEventList with the MappedEvents that should sound during this slice. They are not necessarily in time-wise order, though each mapper's events are in order.

Then RosegardenSequencer (through a thread synchronization mechanism that I won't go into) passes the list to Driver::processEventsOut, a virtual function that (always, I think) is really AlsaDriver::processEventsOut. MappedEvents from notes and MappedEvents from MIDI input both go to Driver. Preview notes also go there via StudioControl (they are made by ImmediateNote).

AlsaDriver::processEventsOut first handles any audio events that it finds, then calls processMidiOut. The audio events are still in the MappedEventList, but they are considered handled at that point. processMidiOut loops over the MappedEventList.
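The double loop, and why the resulting list is not in time-wise order, can be shown with a small sketch. It is a simplification under stated assumptions: SketchMapped, SketchMapperIterator and fillSliceSketch are hypothetical names, times are plain integers, the acceptance test is reduced to a single muted flag, and the special handling of the metronome mapper is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct SketchMapped {
    long time;
    int pitch;
};

// Stand-in for one mapper plus its iterator.
struct SketchMapperIterator {
    std::vector<SketchMapped> events;  // in time order within this mapper
    std::size_t next = 0;              // index of the next event to process
    bool muted = false;                // crude stand-in for the acceptance checks
};

// Collect every event that starts before sliceEnd, one event per mapper per pass.
std::vector<SketchMapped> fillSliceSketch(std::vector<SketchMapperIterator>& its,
                                          long sliceEnd) {
    std::vector<SketchMapped> out;
    bool progressed = true;
    while (progressed) {                 // outer loop: stop once no mapper played
        progressed = false;
        for (SketchMapperIterator& it : its) {  // inner loop: each active mapper
            assert(it.next <= it.events.size());
            if (it.next == it.events.size()) continue;
            const SketchMapped& ev = it.events[it.next];
            if (ev.time >= sliceEnd) continue;  // belongs to a later slice
            ++it.next;
            progressed = true;
            if (!it.muted) out.push_back(ev);   // accepted events are inserted
        }
    }
    return out;
}
```

Because each pass takes one event from every mapper in turn, two mappers with events at times 0, 2 and at time 5 produce the order 0, 5, 2: each mapper's own events stay in order, but the combined list does not.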
For each event, it determines what instrument the event is to play on. If the instrument is a MIDI instrument, it determines what port to play through. processMidiOut then uses a switch statement to determine the MappedEvent's type. If it's a type it handles, it will make a snd_seq_event_t, a type that the ALSA library (alsa-lib) declares. (It actually creates and partially initializes a snd_seq_event_t object early and just doesn't use it if it gets an unused type.) For normal notes, the case is MappedEvent::MidiNoteOneShot, which sets channel, pitch and velocity.

After the switch, processMidiOut calls processSoftSynthEventOut for Softsynth events. MIDI events are sent to snd_seq_event_output. We also add a pending noteoff for the note. (We ignore the noteoffs that InternalSegmentMapper made.)

The rest is done by third-party libraries, notably alsa-lib, and by your sound hardware. And that's how a note becomes a sound in Rosegarden.
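As a last illustration, here is a rough sketch of the shape of that output step: a switch on the event type, a note-on built for one-shot notes, and a pending note-off queued for when the note should end. It deliberately avoids the real ALSA types so that it stands alone. SketchType, SketchOutEvent, SketchNoteOn, SketchNoteOff, SketchOutput and processOutSketch are all hypothetical names, and times are plain milliseconds.

```cpp
#include <cassert>
#include <vector>

enum class SketchType { NoteOneShot, Other };

// Stand-in for a MappedEvent arriving at the driver.
struct SketchOutEvent {
    SketchType type;
    int channel;
    int pitch;
    int velocity;
    long timeMs;
    long durationMs;
};

struct SketchNoteOn {
    int channel;
    int pitch;
    int velocity;
    long timeMs;
};

struct SketchNoteOff {
    int channel;
    int pitch;
    long timeMs;
};

// Stand-in for the sequencer output queue and the pending note-off queue.
struct SketchOutput {
    std::vector<SketchNoteOn> sent;
    std::vector<SketchNoteOff> pendingNoteOffs;
};

void processOutSketch(const std::vector<SketchOutEvent>& list, SketchOutput& out) {
    for (const SketchOutEvent& ev : list) {
        switch (ev.type) {
        case SketchType::NoteOneShot:
            assert(ev.durationMs >= 0);
            // Channel, pitch and velocity are what a one-shot note needs.
            out.sent.push_back(SketchNoteOn{ev.channel, ev.pitch, ev.velocity, ev.timeMs});
            // The driver schedules its own note-off at the end of the note.
            out.pendingNoteOffs.push_back(
                SketchNoteOff{ev.channel, ev.pitch, ev.timeMs + ev.durationMs});
            break;
        default:
            break;  // types this sketch does not handle are skipped
        }
    }
}
```

In the real driver the note-on would be a snd_seq_event_t handed to snd_seq_event_output; the sketch only mirrors the control flow.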